Today, I aim to analyze an article penned by Sam Altman a few years ago. We are already witnessing the impacts of the technological revolution he described, and we are nearing the initial milestone he mentioned.

It's important to note that the analysis that follows represents my personal viewpoint and should be considered with a degree of skepticism. My primary objective is to gain a deeper understanding of the article and share my insights with you. Additionally, I aspire to translate the article into Greek to make it accessible to the Greek-speaking community.

Please be aware that the article was authored in 2021, and the world has undergone significant changes since then. You can read the original article at https://moores.samaltman.com/.

For those who may not be familiar with Sam Altman, he is the CEO of OpenAI, a celebrated entrepreneur, and the former president of Y Combinator. I respect him for his intellect and his contributions to the field. OpenAI, a leading artificial intelligence research organization, stands at the forefront of a domain poised to transform our world.

Interestingly, in November 2023, Sam Altman experienced an unprecedented situation: he was dismissed from OpenAI, only to be rehired a few days later. This sequence of events is sure to become a subject of study in the future. While there is much speculation surrounding the circumstances of his departure and return, my focus remains on the article he wrote, which appears to serve as a foundational manifesto for the new era he envisioned.

Intro

My work at OpenAI reminds me every day about the magnitude of the socioeconomic change that is coming sooner than most people believe. Software that can think and learn will do more and more of the work that people now do. Even more power will shift from labor to capital. If public policy doesn’t adapt accordingly, most people will end up worse off than they are today. [...]

In the next five years, computer programs that can think will read legal documents and give medical advice. In the next decade, they will do assembly-line work and maybe even become companions. And in the decades after that, they will do almost everything, including making new scientific discoveries that will expand our concept of “everything.”

Sam Altman opens with a striking declaration, showing his willingness to forecast the future without hedging. Counting from 2021, he sets the next five years, up to roughly 2026, as the first significant benchmark. We are already observing the effects of the technological revolution he outlines, with reports emerging of AI applications in the legal and medical fields. Thus, we are approaching the first milestone he highlighted.

This technological revolution is unstoppable. And a recursive loop of innovation, as these smart machines themselves help us make smarter machines, will accelerate the revolution’s pace. Three crucial consequences follow:

- This revolution will create phenomenal wealth. The price of many kinds of labor (which drives the costs of goods and services) will fall toward zero once sufficiently powerful AI “joins the workforce.”

- The world will change so rapidly and drastically that an equally drastic change in policy will be needed to distribute this wealth and enable more people to pursue the life they want.

- If we get both of these right, we can improve the standard of living for people more than we ever have before.

[...] Policy plans that don’t account for this imminent transformation will fail for the same reason that the organizing principles of pre-agrarian or feudal societies would fail today.

The cycle of continuous innovation that Sam Altman describes is already unfolding: AI technologies are advancing rapidly, and the rate of progress is accelerating. Some of the consequences he predicts are starting to take shape, as more tasks become cheaper to automate and calls for significant policy reform grow more urgent. The introduction alone is enough to provoke serious thought about the future and the transformations ahead.

Just a week ago, OpenAI announced Sora, an AI model capable of generating realistic videos from textual instructions. This development marks a significant stride toward the Artificial General Intelligence (AGI) that Sam Altman envisions.

The AI Revolution

On a zoomed-out time scale, technological progress follows an exponential curve. Compare how the world looked 15 years ago (no smartphones, really), 150 years ago (no combustion engine, no home electricity), 1,500 years ago (no industrial machines), and 15,000 years ago (no agriculture). [...]

The technological progress we make in the next 100 years will be far larger than all we’ve made since we first controlled fire and invented the wheel. We have already built AI systems that can learn and do useful things. They are still primitive, but the trendlines are clear.

The concept of exponential technological progress is profoundly challenging to grasp, primarily because the human mind is not inherently equipped to understand exponential growth. This lack of comprehension is a key reason why many people struggle to accurately predict the future.

My personal apprehension stems from our current reluctance to prepare for a future where the AI revolution will transform our lives in unexpected and novel ways. This upcoming transformation is akin to, yet fundamentally distinct from, the socio-economic upheaval brought about by the industrial revolution.

However, even if we disregard the distant revolution of 200 years ago, we have recently experienced a global crisis that demanded swift and decisive action. The COVID-19 pandemic demonstrated our capacity for rapid adaptation in response to pressing challenges. It has shown that we possess the resilience to adjust to new realities, a trait we must harness to ready ourselves for the impending AI revolution.

Moore's Law for Everything

[...] The best way to increase societal wealth is to decrease the cost of goods, from food to video games. Technology will rapidly drive that decline in many categories. Consider the example of semiconductors and Moore’s Law: for decades, chips became twice as powerful for the same price about every two years. [...]

Moore's Law is an empirical observation rather than a law of nature, but it has become synonymous with the swift progression of technology and exemplifies the exponential increase in computing capability. It has played a pivotal role in reducing the costs of goods and services, and it holds promise for continuing this trend into the future.

Sam Altman extends the principle of Moore's Law to encompass exponential growth across all facets of life, proposing that the costs of goods and services will diminish exponentially. This premise foretells a future where affordability is vastly improved.
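Here is a minimal worked formula that makes the premise concrete. As a simplification, it assumes that a price halves exactly every two years, mirroring the chip cadence Altman cites. The symbols $P_0$ (today's price) and $t$ (years elapsed) are my own notation, not Altman's.

```latex
P(t) = P_0 \cdot 2^{-t/2}
\qquad\Longrightarrow\qquad
P(20) = P_0 \cdot 2^{-10} = \frac{P_0}{1024}
```

After two decades, the same item would cost roughly a thousandth of its current price. This is why a modest-sounding halving rate compounds into such a dramatic change.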

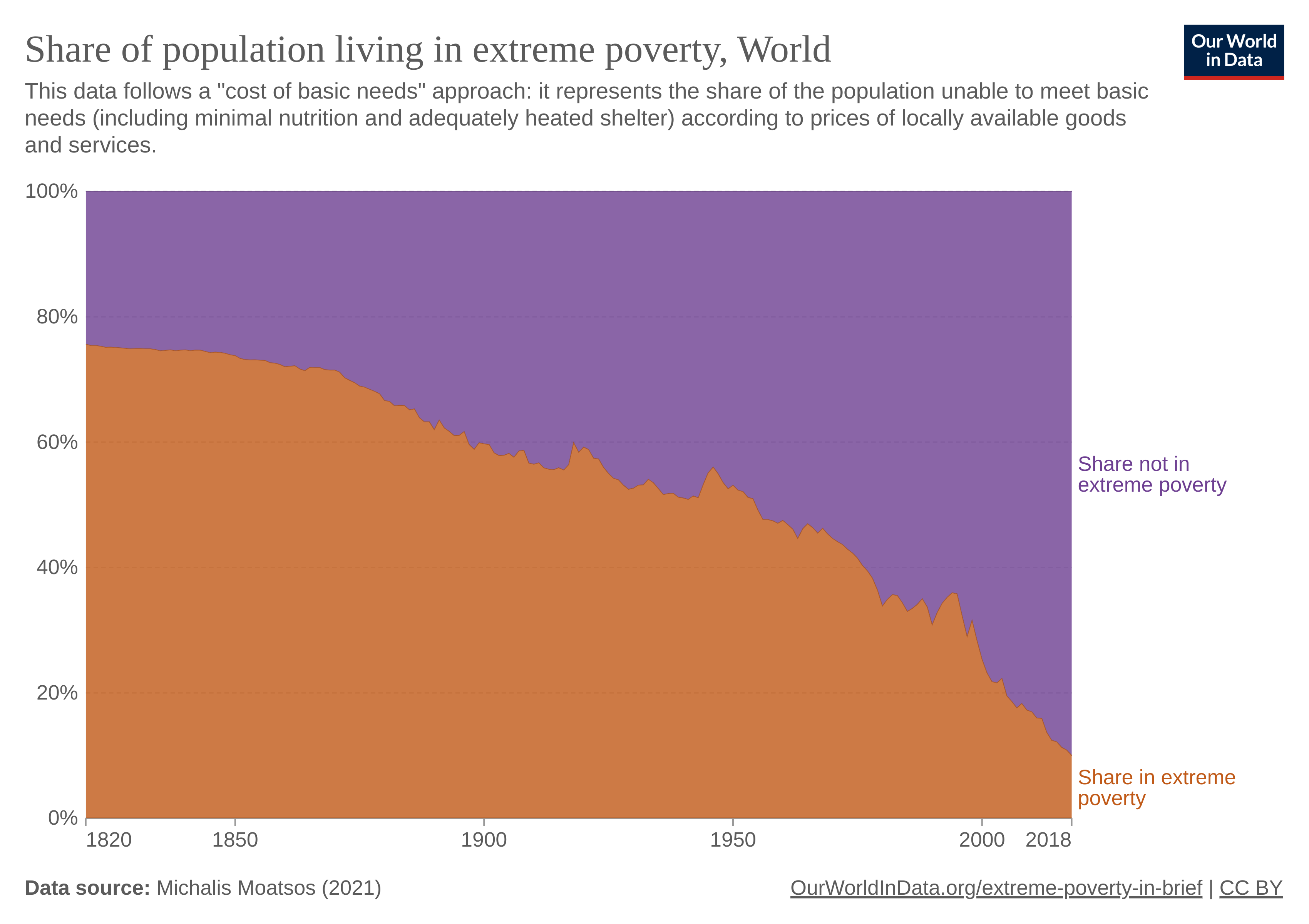

The chart above tracks the reduction of global poverty over the past five decades and shows a steep decline. Falling costs of goods and services are one plausible contributor to that trend. If the pattern persists alongside the exponential advance of technology, it could drive costs down further and make essentials more accessible.

Source: Our World in Data

AI will lower the cost of goods and services, because labor is the driving cost at many levels of the supply chain. If robots can build a house on land you already own from natural resources mined and refined onsite, using solar power, the cost of building that house is close to the cost to rent the robots. And if those robots are made by other robots, the cost to rent them will be much less than it was when humans made them.

Similarly, we can imagine AI doctors that can diagnose health problems better than any human, and AI teachers that can diagnose and explain exactly what a student doesn’t understand.

We are starting to witness AI's potential to transform the labor market significantly. As AI reduces labor costs, the prices of goods and services are expected to fall. This shift will make products and services more accessible and affordable, but it also heralds a future where numerous jobs may be automated by AI.

“Moore’s Law for everything” should be the rallying cry of a generation whose members can’t afford what they want. It sounds utopian, but it’s something technology can deliver (and in some cases already has). Imagine a world where, for decades, everything–housing, education, food, clothing, etc.–became half as expensive every two years. [...]

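Consider this exercise: recall the price of an item you bought a decade ago and compare it with its price today. For electronics such as televisions or computer storage, you will likely see a substantial drop. For housing, education, or healthcare, you will likely see the opposite. That gap is exactly what Altman wants technology to close.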

Alternatively, think of a car you wish to purchase and imagine halving its price every two years. How long will it take before the car becomes affordable for you?
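The car exercise can be answered with a few lines of arithmetic. The sketch below assumes a smooth exponential decline with a two-year halving period. The function names and the $40,000 car / $10,000 budget figures are hypothetical, chosen only for illustration.

```python
import math


def years_until_affordable(price: float, budget: float, halving_years: float = 2.0) -> float:
    """Years until a price that halves every `halving_years` falls to `budget`.

    Assumes a smooth decline: price(t) = price * 2 ** (-t / halving_years).
    """
    if price <= 0 or budget <= 0:
        raise ValueError("price and budget must be positive")
    if budget >= price:
        return 0.0  # already affordable
    # Solve price * 2 ** (-t / h) = budget for t:  t = h * log2(price / budget)
    return halving_years * math.log2(price / budget)


def halvings_needed(price: float, budget: float) -> int:
    """Number of discrete price halvings before the price is at or below `budget`."""
    if price <= 0 or budget <= 0:
        raise ValueError("price and budget must be positive")
    count = 0
    while price > budget:
        price /= 2
        count += 1
    return count


if __name__ == "__main__":
    # Hypothetical example: a $40,000 car and a $10,000 budget.
    print(years_until_affordable(40_000, 10_000))  # 4.0
    print(halvings_needed(40_000, 10_000))         # 2 halvings, i.e. 4 years
    # A less round case: a $30,000 car and a $5,000 budget.
    print(round(years_until_affordable(30_000, 5_000), 1))  # 5.2
    print(halvings_needed(30_000, 5_000))                   # 3 halvings, i.e. 6 years
```

The two functions differ because the first treats the decline as continuous, while the second counts whole two-year steps. The gap between 5.2 and 6 years comes from rounding up to the next halving.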

Capitalism for Everyone

A stable economic system requires two components: growth and inclusivity. Economic growth matters because most people want their lives to improve every year. In a zero-sum world, one with no or very little growth, democracy can become antagonistic as people seek to vote money away from each other. What follows from that antagonism is distrust and polarization. In a high-growth world the dogfights can be far fewer, because it’s much easier for everyone to win.

Economic inclusivity means everyone having a reasonable opportunity to get the resources they need to live the life they want. Economic inclusivity matters because it’s fair, produces a stable society, and can create the largest slices of pie for the most people. As a side benefit, it produces more growth.

Capitalism is a powerful engine of economic growth because it rewards people for investing in assets that generate value over time, which is an effective incentive system for creating and distributing technological gains. But the price of progress in capitalism is inequality.

Some inequality is ok–in fact, it’s critical, as shown by all systems that have tried to be perfectly equal–but a society that does not offer sufficient equality of opportunity for everyone to advance is not a society that will last.

These concepts are not new, but they are presented in a way that is both compelling and thought-provoking. However, I would like to add a few facts, some from after the article was written, that may be important to consider.

OpenAI was founded in 2015 as a non-profit organization, but in 2019 it created a "capped-profit" subsidiary, OpenAI LP, controlled by the non-profit's board. Many criticized the change as a move to prioritize profits over the organization's original mission. This shift in focus may have implications for the future of AI and its impact on society.

The table below summarizes OpenAI's funding history, as compiled by 33rdsquare:

| Year | Event | Funding Amount | Leading Investors |
|---|---|---|---|
| 2015 | OpenAI kicks off with seed funding as a non-profit | $1 million | Sam Altman, Jessica Livingston, Peter Thiel... |
| 2016 | Series A funding round | $116 million | Sam Altman |
| 2019 | Raises funds, becoming a ‘unicorn’ startup | $1 billion | Microsoft |
| 2020 | Series C funding round | $200 million | Tencent and others |
| 2021 | Series D funding round | $865.8 million | Existing investors |
| 2022 | Raises funds at $29 billion valuation | $300 million | VC firms |
| 2023 | Microsoft invests significantly across two tranches | > $10 billion | Microsoft |

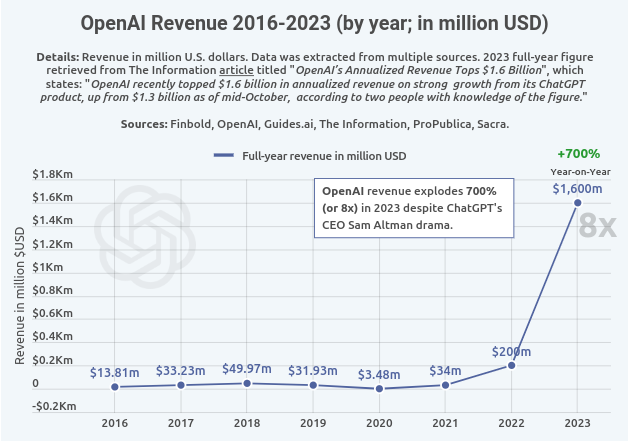

And a graph of the annual revenue of OpenAI from 2016 to 2023:

One could criticize the shift away from a pure non-profit as a move that prioritizes profits over the organization's original mission. The funding figures above, with billions coming from a single corporate investor, sharpen that concern. It also seems that Altman speaks from a position of privilege, and his ideas may not apply to everyone.

The traditional way to address inequality has been by progressively taxing income. For a variety of reasons, that hasn’t worked very well. It will work much, much worse in the future. While people will still have jobs, many of those jobs won’t be ones that create a lot of economic value in the way we think of value today. As AI produces most of the world’s basic goods and services, people will be freed up to spend more time with people they care about, care for people, appreciate art and nature, or work toward social good.

We should therefore focus on taxing capital rather than labor, and we should use these taxes as an opportunity to directly distribute ownership and wealth to citizens. In other words, the best way to improve capitalism is to enable everyone to benefit from it directly as an equity owner. This is not a new idea, but it will be newly feasible as AI grows more powerful, because there will be dramatically more wealth to go around. The two dominant sources of wealth will be 1) companies, particularly ones that make use of AI, and 2) land, which has a fixed supply.

Having followed the news and lived through the events of the past few years, I find it clear that the traditional way of addressing inequality has not worked well. The pandemic exacerbated existing inequalities, and the usual remedies proved ineffective. The idea of taxing capital rather than labor is an interesting one, and it may be worth exploring further.

As long as the country keeps doing better, every citizen would get more money from the Fund every year (on average; there will still be economic cycles). Every citizen would therefore increasingly partake of the freedoms, powers, autonomies, and opportunities that come with economic self-determination. Poverty would be greatly reduced and many more people would have a shot at the life they want.

[...]

In a world where everyone benefits from capitalism as an owner, the collective focus will be on making the world “more good” instead of “less bad.” These approaches are more different than they seem, and society does much better when it focuses on the former. Simply put, more good means optimizing for making the pie as large as possible, and less bad means dividing the pie up as fairly as possible. Both can increase people’s standard of living once, but continuous growth only happens when the pie grows.

Maybe it is just an internal fear, as someone living in a country that has been in continuous economic crisis for the past 12 years, but I am not sure the collective focus will be on making the world "more good" instead of "less bad". Altman focuses on the positive outlook for his own country and does not take the global perspective into account. The world is not a single country, and the future he envisions may not apply everywhere. For example, forces beyond the control of any single country, such as climate change, may have a significant impact on that future.

Additionally, only the wealthiest countries may be able to invest in AI and benefit from it directly. The rest of the world may become merely a consumer of the products and services AI produces. This would create a new kind of inequality at a global level.

Implementation and Troubleshooting

[...]

We’d need to design the system to prevent people from consistently voting themselves more money. A constitutional amendment delineating the allowable ranges of the tax would be a strong safeguard. It is important that the tax not be so large that it stifles growth–for example, the tax on companies must be much smaller than their average growth rate.

We’d also need a robust system for quantifying the actual value of land. One way would be with a corps of powerful federal assessors. Another would be to let local governments do the assessing, as they now do to determine property taxes. They would continue to receive local taxes using the same assessed value. However, if a certain percentage of sales in a jurisdiction in any given year falls too far above or below the local government’s estimate of the property’s values, then all the other properties in their jurisdiction would be reassessed up or down. [...]
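The land-reassessment safeguard can be read as a simple feedback rule. Below is a minimal sketch that rests on clearly stated assumptions. The quoted passage does not specify the "certain percentage" or how far is "too far", so `tolerance` and `trigger_share` are hypothetical parameters. Scaling all assessments by the median sale-to-assessment ratio is my own choice of adjustment, not part of the proposal.

```python
from statistics import median
from typing import Dict, List, Tuple


def reassess_jurisdiction(
    assessments: Dict[str, float],
    sales: List[Tuple[str, float]],
    tolerance: float = 0.15,      # HYPOTHETICAL: deviation beyond which a sale is "too far" off
    trigger_share: float = 0.25,  # HYPOTHETICAL: share of deviant sales that triggers reassessment
) -> Dict[str, float]:
    """Return updated assessed values for every property in one jurisdiction.

    `assessments` maps property id -> the local government's assessed value.
    `sales` lists (property id, sale price) for properties sold this year.
    """
    if not sales:
        return dict(assessments)

    # Ratio of actual sale price to the government's estimate for each sale.
    ratios = [price / assessments[pid] for pid, price in sales]
    deviant = [r for r in ratios if abs(r - 1.0) > tolerance]

    if len(deviant) / len(ratios) < trigger_share:
        return dict(assessments)  # estimates are close enough; no reassessment

    # Assumption: shift every estimate by the median sale-to-estimate ratio,
    # so systematic under-assessment moves values up and over-assessment down.
    factor = median(ratios)
    updated = {pid: value * factor for pid, value in assessments.items()}
    # Properties that actually sold take their observed market price.
    for pid, price in sales:
        updated[pid] = price
    return updated


if __name__ == "__main__":
    # Hypothetical jurisdiction: four properties, two sold 30% above their estimate.
    values = {"a": 100.0, "b": 200.0, "c": 300.0, "d": 400.0}
    print(reassess_jurisdiction(values, [("a", 130.0), ("b", 260.0)]))
```

The sketch uses a median rather than a mean so that a single unusual sale cannot swing the valuation of the whole jurisdiction.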

In this section, Altman discusses how to implement his proposed solution, the American Equity Fund. The idea is to tax capital rather than labor and to use those taxes to distribute ownership and wealth directly to citizens. The two dominant sources of wealth will be companies, particularly ones that make use of AI, and land, which has a fixed supply.
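Altman's safeguard that the tax on companies "must be much smaller than their average growth rate" follows from simple compounding. The sketch below is a stylized model, not the Fund's actual mechanics. Each year a company's value grows by a rate g, and then a fraction τ is paid out as the capital tax, so the value compounds by (1 + g)(1 - τ). The value keeps growing only while that factor exceeds 1, i.e. while τ < g / (1 + g), which is slightly below g itself. The growth and tax rates used are hypothetical.

```python
from typing import List


def simulate_company_value(initial_value: float, growth_rate: float,
                           tax_rate: float, years: int) -> List[float]:
    """Yearly company value when growth is followed by a proportional capital tax.

    Each year: value -> value * (1 + growth_rate) * (1 - tax_rate).
    """
    values = [initial_value]
    value = initial_value
    for _ in range(years):
        value = value * (1 + growth_rate) * (1 - tax_rate)
        values.append(value)
    return values


def breakeven_tax_rate(growth_rate: float) -> float:
    """Tax rate at which the company's value stays flat: (1 + g)(1 - t) = 1."""
    return growth_rate / (1 + growth_rate)


if __name__ == "__main__":
    g = 0.08  # hypothetical average growth rate of 8% per year
    for tax in (0.03, breakeven_tax_rate(g), 0.10):
        final = simulate_company_value(100.0, g, tax, years=20)[-1]
        print(f"tax={tax:.3f}  value after 20 years={final:.1f}")
```

With these illustrative numbers, a 3% tax still leaves the company roughly 2.5 times larger after 20 years. A 10% tax, above the 8% growth rate, shrinks it to just over half its starting value.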

There are reports that some billionaires are already buying land in large quantities. This may be a sign that they are preparing for the future Altman envisions.

Finally, we couldn’t let people borrow against, sell, or otherwise pledge their future Fund distributions, or we won’t really solve the problem of fairly distributing wealth over time. The government can simply make such transactions unenforceable.

It is amazing to see the word "simply" in the same sentence as "government". It is not simple to make such transactions unenforceable, nor is it simple to solve the problem of fairly distributing wealth over time.

Shifting to the New System

A great future isn’t complicated: we need technology to create more wealth, and policy to fairly distribute it. Everything necessary will be cheap, and everyone will have enough money to be able to afford it. As this system will be enormously popular, policymakers who embrace it early will be rewarded: they will themselves become enormously popular.

[...]

The changes coming are unstoppable. If we embrace them and plan for them, we can use them to create a much fairer, happier, and more prosperous society. The future can be almost unimaginably great.

The article's conclusion is optimistic, and it is clear that the coming changes are unstoppable. Sam believed this back in 2021, and many users of today's still-primitive AI products, such as ChatGPT, can see it now. The future may change almost unimaginably, and it is up to us to embrace that change and plan for it.

In a future where AI produces most of the world's basic goods and services, things that seem expensive today could become easily affordable. Imagine a world where energy is almost free, climate change is solved, and everyone has access to the best healthcare. This is the future Sam Altman envisions, and I certainly hope it comes true.

Acknowledgments

In the article's footer, Sam Altman extends his gratitude to individuals who reviewed drafts of the article and contributed to its design. I found it intriguing to explore who these people are and what their roles were at the time the article was composed.

Since the identities of these individuals have not been disclosed, I have tried to identify them using their LinkedIn profiles or other publicly available information. Where several people share a name, I selected the one whose profile and background appeared to align most closely with Altman's path. There may be inaccuracies in this identification, and I welcome any corrections or additional information.

Below is the final list, accompanied by a brief overview of their professional activities:

- Steven Adler: Product Manager & Product Safety Lead at OpenAI. He built and scaled OpenAI's Product Safety team to ~10 members to help customers deploy AI products responsibly.

- Daniela Amodei: Former VP of Safety and Policy at OpenAI, and President of Anthropic, both currently and when the article was published. Anthropic is another AI research lab, founded by former OpenAI members.

- Adam Baybutt: A researcher in econometrics, empirical asset pricing, and machine learning. He appears to have collaborated with Sam Altman on articulating directions for crypto research and startups.

- Chris Beiser: He was a Product Designer at reduct.video, and in May 2023 he joined the Midjourney team. Midjourney is one of the first companies to build an AI model (reportedly based in part on Stable Diffusion at one stage) capable of generating realistic images from textual instructions.

- Jack Clark: He was Policy Director at OpenAI until 2020 and co-founded Anthropic around the time the article was published.

- Ryan Cohen: He is known as one of the largest individual shareholders of Apple Inc. and as the founder of Chewy. In 2020 he disclosed a 9.8% stake in GameStop, and he was recently appointed CEO of GameStop.

- Tyler Cowen: He is an economist and the Holbert L. Harris Chair of Economics at George Mason University, where he is also the Director of the Mercatus Center. He wrote the "Economic Scene" column for The New York Times and has been a regular opinion columnist at Bloomberg Opinion since July 2016.

- Matt Danzeisen: He is the partner of the famous American entrepreneur, venture capitalist, and author Peter Andreas Thiel, and he reportedly serves as a portfolio manager at Thiel Capital.

- Steve Dowling: Coming from Apple, he was the Vice President of Communications at OpenAI at the time of the article.

- Tad Friend: He has been a staff writer at The New Yorker since 1998. He covers a wide range of topics, from Silicon Valley and celebrities to impactful pieces such as "Jumpers," his article on Golden Gate Bridge suicides, which influenced both film and music.

- Lachy Groom: Previously head of Stripe Issuing, a core payments product, and now an individual investor in several companies. Stripe is a well-known company that builds payment infrastructure for applications.

- Chris Hallacy: A member of the OpenAI technical staff from its early days to this day.

- Reid Hoffman: From Apple to PayPal to LinkedIn, Reid Hoffman has worked at or co-founded several successful companies. At the time of the article, he was a founding investor in OpenAI.

- Ingmar Kanitscheider: He has been a research scientist at OpenAI since its early days and worked on optimizations for the GPT-4 model.

- Oleg Klimov: Another former OpenAI member, he was on the technical staff until a few months after the article was published. He is currently the founder of Refact.ai, a direct competitor of GitHub Copilot.

- Matt Knight: He has been Head of Security at OpenAI since 2020.

- Aris Konstantinidis: He worked on the Business Operations team at OpenAI. He is currently a co-founder of Cohere, a company that builds AI products similar to OpenAI's.

- Andrew Kortina: He is a co-founder of Venmo and Fin.com (though I am not sure this is the right person).

- Matt Krisiloff: He was one of the founding members of the OpenAI team and Director of Y Combinator Research. He is currently the CEO and co-founder of Conception, a company working to turn stem cells into human eggs.

- Scott Krisiloff: He was listed as an advisor to Helion for two years before the article was published. Helion is building a fusion power plant. Shortly after the article was published, he became Chief Business Officer. Notably, he negotiated and signed the world's first fusion power purchase agreement (PPA), with Microsoft.

- John Luttig: He is a Principal at Founders Fund, a venture capital firm with investments in Airbnb, Armada, DeepMind, Lyft, Facebook, Flexport, Palantir Technologies, SpaceX, Spotify, Stripe, Wish, Neuralink, Nubank, and Twilio.

- Erik Madsen: I am not entirely certain it's him, as I cannot find any information at the time the article was published that links him to Altman. However, I have a strong intuition that he is the Associate Professor of Economics at New York University.

- Preston McAfee: He is an economist who has worked at Google and Microsoft, and he has been a Distinguished Scientist at Google since 2020.

- Luke Miles: After coming through Y Combinator, he worked as a Software Engineer at Stripe and, in 2020, at Byte (later renamed Clash). He went on to found Context, a web3 company that raised $19.5 million from several investors, including Sam Altman.

- Arvind Neelakantan: He is still a member of the OpenAI technical staff. He worked at Google Research before joining OpenAI, and he is one of the authors of "Language Models are Few-Shot Learners", the paper that introduced the GPT-3 model.

- David Oates: He is the CEO of Curtsy, a company that builds a marketplace for thrifting. I believe he has connections with Sam Altman as he is located in San Francisco and has a background in technology and entrepreneurship.

- Cullen O’Keefe: He was Counsel for Policy and Governance at OpenAI and he is currently Research Lead at Policy.

- Alethea Power: She was a member of the OpenAI technical staff from 2020 until last year. She is one of the authors of "Evaluating Large Language Models Trained on Code", the paper that introduced the Codex model, which powered GitHub Copilot at launch.

- Raul Puri: He was a Deep Learning Research Scientist at NVIDIA before joining OpenAI in 2020, where he is currently a Research Scientist. He is also one of the authors of the "Evaluating Large Language Models Trained on Code" paper.

- Ilya Sutskever: He is a co-founder and the Chief Scientist of OpenAI. He played a significant role in Sam Altman's departure from the company, and his disagreement with Altman over the pace of technology development was reportedly a key factor in that decision.

- Luke Walsh: I could not determine who this person is among all the Luke Walshes on public platforms.

- Caleb Watney: He is the co-founder of the Institute for Progress (IFP), a think tank focused on innovation policy and the impact of technology on society.

- Wojciech Zaremba: He is a Polish computer scientist and a founding team member of OpenAI, where he leads both the Codex research and language teams.

- Gregory Koberger: He is the founder of ReadMe, a company that builds developer hubs. Altman also credits him with the design of the article page.

This article was generated with the assistance of AI and refined using proofing tools. While AI technologies were used, the content and ideas expressed in this article are the result of human curation and authorship.

Read more about this topic at: Importance is All You Need