This will be another technical post about how I implemented a simple ranking algorithm for my pet project News. However I will try to keep it simple and understandable for non tech savvy persons.

The needed feature was a list of hot news to show at the home page. The definition of what is was not clear at the time, but I knew that I needed to use social media data to calculate it. I will explain how I did it.

A few things I did know was that an article should not be older than three days and that I had to calculate a score that should decay over time.

For this, user preferences and other factors are not taken into account and considered out of scope. Those are more complex and I will tackle them in the future.

Gathering Data through Social Media Networks

I decided to harness the power of social media networks like Reddit and Hacker News to understand what constitutes a 'hot' article. Through scrupulous data collection from these platforms, I started getting clearer insights. Each network has a score for the post and I will store that in the database.

But first things first: I needed to fetch the data from those platforms. News articles are essentially links to the original news sources, so I needed to find which articles from the database have been shared on social. A simple thing to do was to use the search function of each platform. Of course, I did use a queue to keep the hosting server from blowing up.

For the Hacker News the url is

https://hn.algolia.com/api/v1/search?tags=story&query=

This will return a json object with a hits array. If the array is empty, then the article was not shared on Hacker News. If the array is not empty, then I will store the points field in our database.

Note that will store every share of the link, so if the article was shared multiple times, it will have multiple entries in our database. This is not a problem, I will just average the points for each article.

For Reddit, the url is

https://www.reddit.com/search.json?q=

It will return a json object with a data.children array. If the array is empty, then the article was not shared on reddit. If the array is not empty, then I will store the data.children.data.score field in our database.

With this data, we will have a table like this and may start working on the algorithm.

| article_id | type | score |

|---|---|---|

| 1 | hackernews | 10 |

| 1 | 20 | |

| 2 | hackernews | 5 |

| 2 | 10 | |

| 3 | hackernews | 17 |

Find an Ranking Algorithm in the Wild

Lets try not reinvent the wheel and find an algorithm that is already working. After some research, I found that reddit has a hot algorithm. A minor issue is that it is written in pyrex and I am using NestJS and Typescript.

I could translate it by hand, but that would be so 2021 🤡. So I will use Copilot. It has a preview feature and the labs that can translate code languages. I did prefer that over ChatGPT as it is specially trained for code and tends to be more accurate.

As my father used to tell me: Use the right tool for the job. If you don't have it, hack it. (The greek use the word patent/πατέντα as just-in-time invention)

The part from Reddit pyrex code from the archived repository that we are interested is:

cpdef double _hot(long ups, long downs, double date):

"""The hot formula. Should match the equivalent function in postgres."""

s = score(ups, downs)

order = log10(max(abs(s), 1))

if s > 0:

sign = 1

elif s < 0:

sign = -1

else:

sign = 0

seconds = date - 1134028003

return round(sign * order + seconds / 45000, 7)

I translate in typescript and I got a good starting point. The next step is to review and fix it as we always have to do when we generate code with LLMs. For explain of how it works, ChatGPT can be very helpful. For example I did need to have a conversation of what is the 45000 number and how it worked.

Eventually I end up with the following code:

calcScore(article: Article) {

// I set some weight to limit the impact of each social network

// I know that hackernews have more technical news

const weights = { reddit: 1, hackernews: .7 }

// I calculate the average of the score of each social network

const average = article.socials.reduce((acc, social) => {

return acc + (social.score * weights[social.name])

}, 0) / article.socials.length

// This is the main algorithm.

// I calculate the order of magnitude of the average using log10

const order = Math.log10(Math.max(Math.abs(average),1))

// I calculate the seconds since the epoch.

// I use 2023 as the epoch because I want to have a positive number

// and our last three days are in 2023

const epoch = new Date(2023, 1, 1)

// The 45000 is a magic number that reddit uses

// I use it too as is. If I lower it, the score will be higher

// and if I increase it, the score will be lower

// This will change of how I favor the age of the article

const magic = 45000

const seconds =

differenceInSeconds(article.publishedAt, epoch) / magic

// I calculate the score and I keep only 7 digits

const score = Math.round((order + seconds) * 100000)

return { average, order, seconds, score}

}

The Results

Before I use it in the home page I need to see if it works. I created a debug api that will show us the score of each article as well the other values. Also I include other of the article in order to have a better understanding of the score.

In this final state there is no deterministic way to know if the score is good or not. I can only use our intuition and preference looking the results. I can also tweak the algorithm to better fit our needs.

The debug endpoint is not listed anywhere in the site, as I use it only for testing purposes. But if you made it this far, you can find it at /debug/hot.

An example response of the results is:

[

{

"id": "7c59e519-16c6-42fa-b265-67569c4c2fef",

"title": "Microsoft documents leak new Bethesda games, including an Oblivion remaster",

"publishedAt": "2023-09-19T07:10:06.749Z",

"calc": {

"average": 463.175,

"order": 2.66574511018601,

"seconds": 442.1734666666667,

"score": 44483921

}

},

{

"id": "6ff8ed99-fe81-4839-a4f1-d356e23bdb66",

"title": "Google quietly raised ad prices to boost search revenue, says executive",

"publishedAt": "2023-09-19T14:01:01.631Z",

"calc": {

"average": 79.1,

"order": 1.8981764834976764,

"seconds": 442.72135555555553,

"score": 44461953

}

},

{

"id": "0efd703d-1399-431d-85bc-ff116ca30060",

"title": "This is Microsoft’s new disc-less Xbox Series X design with a new controller",

"publishedAt": "2023-09-19T05:45:05.849Z",

"calc": {

"average": 276.4230769230769,

"order": 2.4415742968859298,

"seconds": 442.0601111111111,

"score": 44450169

}

},

...

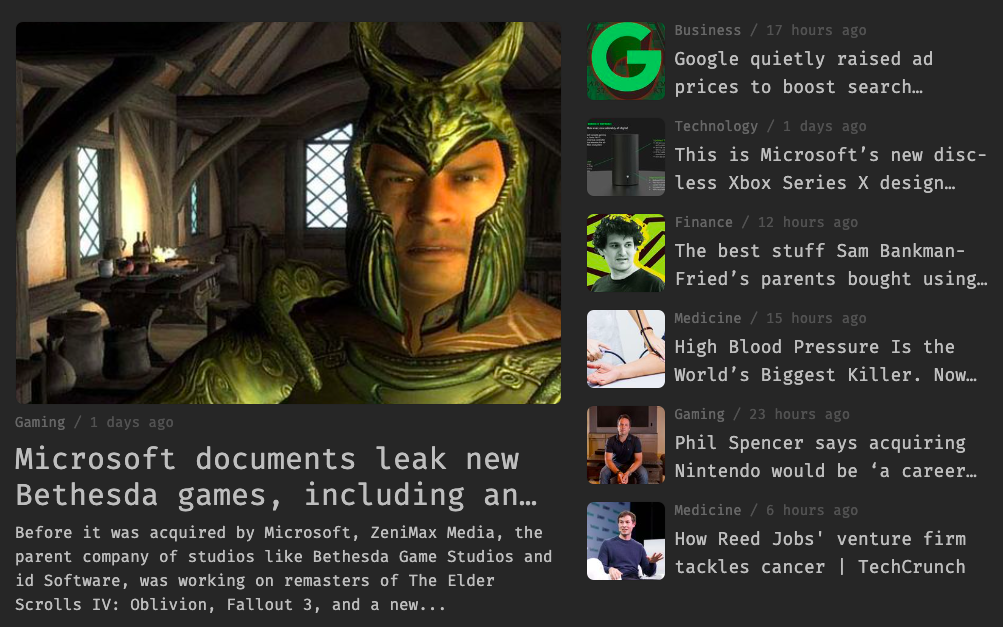

When I was ready, it was a simple matter of using the algorithm in the home page. I used the score to sort the articles and limit the results to 7 articles

The result as it is at home is:

In Conclusion

Building a ranking algorithm may sound daunting, especially if you aren't very tech-savvy. But with a good understanding of your goals, the right data, an appropriate algorithm and tools to aid in understanding and translation, it becomes a lot simpler.

Whether you're an aspiring coder, a tech enthusiast, or simply someone interested in how things work behind the scenes, I hope this blog demystified ranking algorithms for you.

If you have any questions or comments about this post, please feel free to contact me. Also I you need an ranking algorithm for your project, I will be happy to help you.

This article was generated with the assistance of AI and refined using proofing tools. While AI technologies were used, the content and ideas expressed in this article are the result of human curation and authorship.

Read more about this topic at: Importance is All You Need